The coming AI reckoning: Why Wall St. is mispricing the largest unreported risk in modern tech

What is the revenue model for AI?

- It is not social media.

- It is not “we will build it and they will come.”

- It is not Facebook, Instagram, or YouTube, where one influencer reaches millions and advertising makes money.

AI is a 1:1, intimate, high-touch service, not a 1-to-millions broadcast system.

- Advertising doesn’t scale.

- Subscriptions plateau.

- “Flat-rate AI” is a fantasy: capex, inference cost, GPU cycles, liability, and regulatory exposure make that model impossible.

In other words:

You are investing billions in something you cannot monetize — and trust will collapse long before scale arrives.

Why? Because:

No memory → no trust

No trust → no adoption

No adoption → no scale

No scale → no revenue

Nobody was afraid of Facebook when they signed up.

Nobody feared Twitter, LinkedIn, or YouTube.

You cannot say that about AI.

The fear is justified — because the architecture is broken

- hallucinations

- mirages

- fabricated citations

- false medical advice

- five wrongful death suits

- deepfakes

- not enough electricity to power the growing demand for GPU cycles

- compliance virtually impossible under potentially conflicting requirements of HIPAA/COPPA/FERPA/KOSA, etc.

- 14,000 Amazon employees cut and replaced with AI

- rising fear of the Luddite cycle repeating

This is not hype.

This is structural.

LLMs today do not forget safely, do not remember reliably, and do not improve based on governed, verified user input.

To survive finite memory limits, they lean on workarounds such as RAG (retrieval-augmented generation), and along the way they compress, discard, and hallucinate. These limits are a given. They cannot be iterated away.

So LLMs use compression that, like JPEG, is “lossy.” In a JPEG, what is lost is pixel detail. In an LLM, what is lost is facts.

Look closely at a JPEG, and you’ll see the missing detail. Look closely at LLM responses, and you’ll see what is missing: Truth.

Without reliable memory, AI cannot be trusted. Without trust, AI cannot scale. And without scale, the market caps tied to AI infrastructure evaporate.

The exposures over the next 3–5 years are staggering:

• 25–50% of Microsoft’s $4T valuation tied to AI

• 20–45% of Alphabet’s $3T valuation tied to AI

• 15–30% of Apple’s $3T valuation tied to AI
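To put dollar figures on those percentages, here is a minimal arithmetic sketch. It uses only the market caps and share ranges stated above, which are this article's estimates rather than audited data; the function and variable names are illustrative.

```python
# (company, market cap in $ trillions, low AI share, high AI share)
# All figures are the article's own estimates, not audited data.
EXPOSURES = [
    ("Microsoft", 4.0, 0.25, 0.50),
    ("Alphabet", 3.0, 0.20, 0.45),
    ("Apple", 3.0, 0.15, 0.30),
]


def exposure_range(cap, low, high):
    """Return the (low, high) AI-linked value in $ trillions for one company."""
    return (cap * low, cap * high)


def total_exposure(rows):
    """Sum the low and high ends of the range across all companies."""
    lows = sum(cap * low for _, cap, low, _ in rows)
    highs = sum(cap * high for _, cap, _, high in rows)
    return (lows, highs)


for name, cap, low, high in EXPOSURES:
    lo_t, hi_t = exposure_range(cap, low, high)
    print(f"{name}: ${lo_t:.2f}T to ${hi_t:.2f}T")

total_lo, total_hi = total_exposure(EXPOSURES)
print(f"Combined: ${total_lo:.2f}T to ${total_hi:.2f}T")
```

On these figures, the three companies together carry roughly $2.05 trillion to $4.25 trillion of AI-linked valuation.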

These valuations assume:

- adoption

- compliance

- safety

- revenue

- insurance

- regulatory approval

None are guaranteed. Most are not possible without a governed, lossless architecture.

This is the largest unpriced risk in modern tech.

The three-step case Wall Street has not heard (but will feel)

1. No metering → no pricing power

If you cannot meter access, volume, or quality at the boundary, every AI feature collapses into:

“all-you-can-eat” → ARPU → zero

Fraud, scraping, automated misuse, and unbounded consumption destroy margins.

Metering is not optional.

Metering is the product.
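What might a boundary meter look like? The following is a minimal sketch, not the filed design. It assumes a hypothetical `Plan` (a SKU with a token quota, a unit price, and a set of permitted quality tiers) and a hypothetical `Meter` that checks access and volume before any compute is spent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A hypothetical SKU; quota, price, and tiers are illustrative only."""
    name: str
    max_tokens: int              # volume cap per billing period
    price_per_1k_tokens: float   # unit price in dollars
    allowed_tiers: frozenset     # quality tiers this SKU may access


@dataclass
class Meter:
    """Tracks one customer's usage against a plan at the service boundary."""
    plan: Plan
    tokens_used: int = 0

    def admit(self, tokens: int, tier: str) -> bool:
        """Gate a request on access (tier) and volume (quota) before serving it."""
        if tier not in self.plan.allowed_tiers:
            return False  # quality tier not included in this SKU
        if self.tokens_used + tokens > self.plan.max_tokens:
            return False  # quota exhausted: no unbounded consumption
        self.tokens_used += tokens
        return True

    def bill(self) -> float:
        """Charge for what was actually admitted, not a flat rate."""
        return self.tokens_used / 1000 * self.plan.price_per_1k_tokens


basic = Plan("basic", max_tokens=10_000, price_per_1k_tokens=0.02,
             allowed_tiers=frozenset({"standard"}))
meter = Meter(basic)
print(meter.admit(4_000, "standard"))  # within quota and tier
print(meter.admit(4_000, "premium"))   # tier not in plan
print(meter.bill())
```

The point of the sketch is the ordering: the check happens at the boundary, so a rejected request costs nothing to serve, and revenue tracks consumption.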

2. No receipts → no scale

Without tamper-proof, regulator-ready logs:

- general counsel cannot face regulators

- insurers cannot underwrite

- enterprises cannot deploy

- plaintiffs’ lawyers can run wild

Every blocked action, every override, every escalation must generate a cryptographically signed receipt.

No receipts = no enterprise contracts.
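As a rough illustration of what a signed receipt could look like, here is a minimal sketch using Python's standard `hmac` and `hashlib` modules. It is an assumption-laden toy, not the filed design: the key, field names, and helper functions are hypothetical, and HMAC with a shared secret stands in for the asymmetric signatures and managed keys a real deployment would likely need. Each receipt also signs the previous receipt's signature, so editing or dropping an earlier entry breaks verification. Strictly speaking, that makes the log tamper-evident rather than tamper-proof.

```python
import hashlib
import hmac
import json

# Assumption: a demo key. A real system would keep keys in an HSM or KMS.
SECRET_KEY = b"demo-key-not-for-production"


def _sign(body: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the receipt body."""
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_receipt(event: str, detail: str, prev_sig: str) -> dict:
    """Sign an event together with the previous receipt's signature."""
    body = {"event": event, "detail": detail, "prev": prev_sig}
    return {**body, "sig": _sign(body)}


def verify_chain(receipts: list) -> bool:
    """Return True only if every signature and every back-link checks out."""
    prev = ""
    for r in receipts:
        body = {"event": r["event"], "detail": r["detail"], "prev": r["prev"]}
        if r["prev"] != prev or not hmac.compare_digest(r["sig"], _sign(body)):
            return False
        prev = r["sig"]
    return True


log = []
for event, detail in [("blocked", "request refused by safety gate"),
                      ("override", "operator approved exception"),
                      ("escalation", "case sent to human review")]:
    prev_sig = log[-1]["sig"] if log else ""
    log.append(make_receipt(event, detail, prev_sig))

print(verify_chain(log))           # chain is intact
log[1]["detail"] = "nothing happened"
print(verify_chain(log))           # the edit is detected
```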

3. No compliance → no regulated customers

FTC, HIPAA, COPPA, FERPA, KOSA, GLBA, EU AI Act.

All potentially conflicting.

All mandatory.

All impossible under today’s architecture.

Without governed memory, governed forgetfulness, governed learning, and governed last-gate checks, no enterprise or public-sector adoption is safe.

If AI cannot comply, revenue cannot materialize.

What management must demonstrate to prove a real revenue model

Boards and investors should ask CEOs of LLM companies:

“How do you meter? How do you turn meters into SKUs? And how do you produce tamper-proof receipts after an incident?”

The answer must include:

- boundary metering (access, volume, quality)

- a kill switch with receipts

- a compute-free mechanism for reconciling conflicting regulations

- SKU logic tied to safety gates and audit lanes

- a memory layer that never loses data and never fabricates

Right now, none of the major LLM vendors can answer this question honestly.

What exists today is an expense, not a product

Executives keep saying:

“We’ll monetize later.”

Later than what?

• regulatory tolerance

• insurer patience

• shareholder runway

• public trust

• scrutiny in the House and the Senate

AI without meters, receipts, and regulatory lanes is not a product.

It is an unbounded liability line.

I’ve already engineered a working revenue model and filed it with the USPTO

Boundary-metered, governed AI

- receipts

- value-based compute allocation

- governed learning loops

- tamper-proof audit packets

= a viable, regulator-safe revenue engine

This is not a deck.

This is not theory.

This is filed with the USPTO.

White-paper-grade backgrounds exist at:

It is called AI$. It is self-evident. It is inevitable.

If enterprises need mechanics-level disclosure, counsel-only review can be arranged.

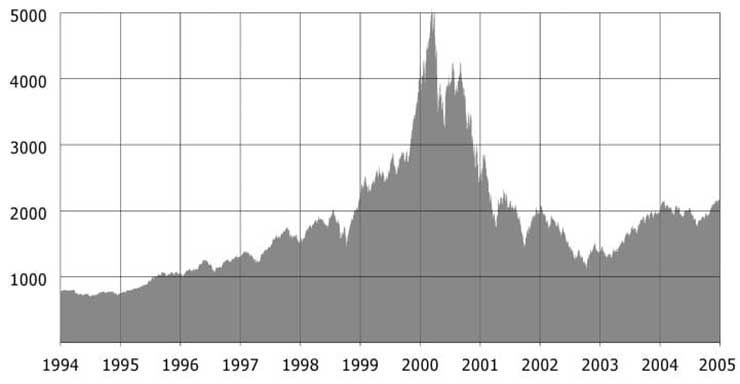

The unspoken truth: The market is pricing AI like electricity. It is much closer to GMOs.

Just a little bad press made genetically modified foods toxic.

AI is on the same trajectory.

The lawsuits are here.

The hearings are here and here.

The regulatory orders are coming.

The fractures in the architecture are already here.

This is the AI reckoning.

And Wall Street is not ready.

My name is Alan Jacobson. I'm a web developer, UI designer and AI systems architect. I have 13 patents pending before the United States Patent and Trademark Office—each designed to prevent the kinds of tragedy you can read about here.

I want to license my AI systems architecture to the major LLM platforms (ChatGPT, Gemini, Claude, Llama, Copilot, Apple Intelligence) at companies like Apple, Microsoft, Google, Amazon and Meta.

Collectively, those companies are worth $15.3 trillion. That’s trillion, with a T—twice the annual budget of the government of the United States. What I’m talking about is a rounding error to them.

With those funds, I intend to stand up 1,400 local news operations across the United States to restore public safety and trust. You can reach me here.